After OpenAI launched its o1 reasoning model, which takes some time to “think” before responding, Google has now released its own thinking model. The new AI model is “Gemini 2.0 Flash Thinking” (gemini-2.0-flash-thinking-exp-1219). It is an experimental preview model and is already available in AI Studio for testing and feedback.

The Gemini 2.0 Flash Thinking model follows the test-time compute paradigm that OpenAI introduced in September. In short, the model spends more compute and more time re-evaluating its reasoning before it generates the final answer.

Preliminary research suggests that when AI models are given more time to “think” during inference, they can outperform models with far more parameters.

Google has built its first thinking model on the small Gemini 2.0 Flash model, but expect inference scaling to arrive in a big way on the larger Gemini 2.0 Pro model (gemini-exp-1206) too.

Google says Gemini 2.0 Flash Thinking can solve complex logic questions as well as difficult math and coding problems. And unlike OpenAI o1, it shows the model's raw thinking process, which is great for transparency.

The new thinking model can also process multimodal inputs such as images, videos, and audio files. Lastly, its knowledge cutoff is August 2024.

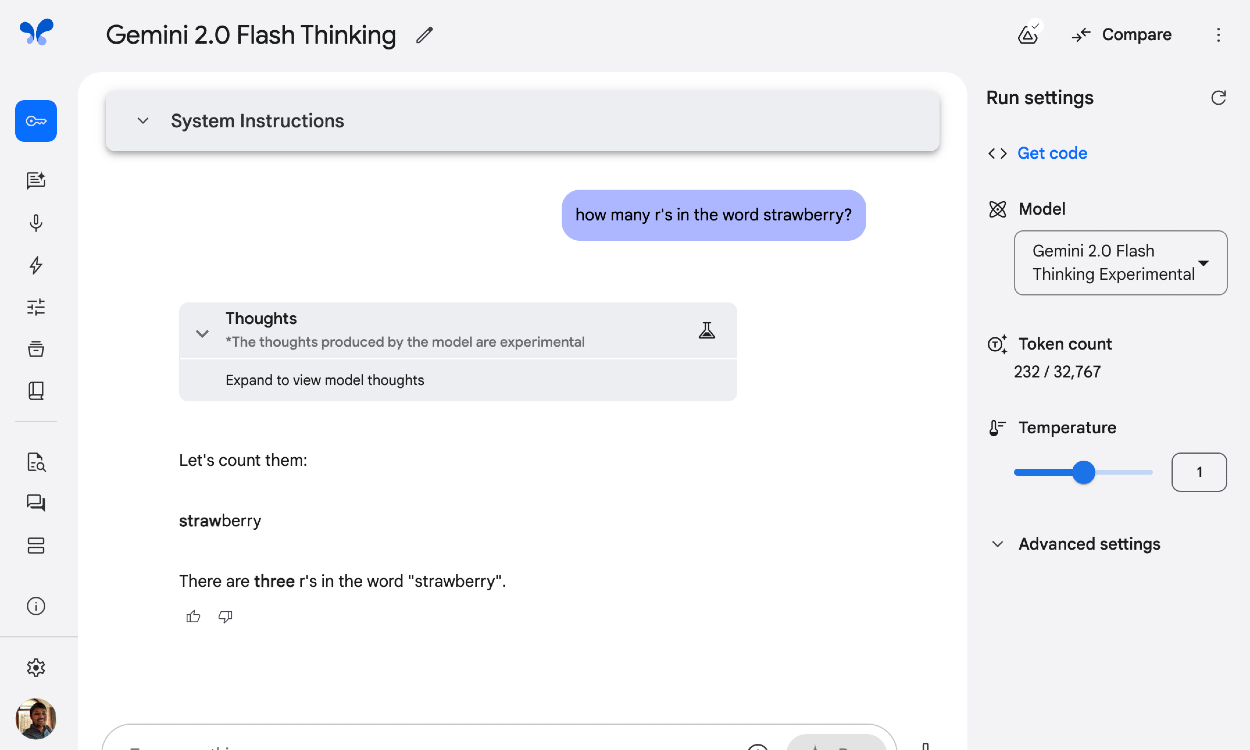

I briefly tested the Gemini 2.0 Flash Thinking model in AI Studio. It failed the popular strawberry question on the first try, but on the next attempt it got it right and said there are three R’s in the word “strawberry”. Next, I asked it to find the Indian states that do not have the letter ‘A’ in their name. Again, the answer was wrong.
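For reference, both test prompts have answers that are easy to check deterministically. The sketch below is not how the model works; it only computes the ground truth. It assumes that “Indian states” means the 28 states as of 2024, excluding union territories such as Delhi or Ladakh, and the helper names `count_letter` and `states_without_letter` are hypothetical.

```python
# Ground-truth checks for the two prompts used in the hands-on test.
# Assumption: "Indian states" = the 28 states as of 2024 (union territories excluded).

INDIAN_STATES = [
    "Andhra Pradesh", "Arunachal Pradesh", "Assam", "Bihar", "Chhattisgarh",
    "Goa", "Gujarat", "Haryana", "Himachal Pradesh", "Jharkhand", "Karnataka",
    "Kerala", "Madhya Pradesh", "Maharashtra", "Manipur", "Meghalaya",
    "Mizoram", "Nagaland", "Odisha", "Punjab", "Rajasthan", "Sikkim",
    "Tamil Nadu", "Telangana", "Tripura", "Uttar Pradesh", "Uttarakhand",
    "West Bengal",
]


def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    return word.lower().count(letter.lower())


def states_without_letter(states: list[str], letter: str) -> list[str]:
    """Return the names that do not contain the letter (case-insensitive)."""
    return [s for s in states if letter.lower() not in s.lower()]


if __name__ == "__main__":
    print(count_letter("strawberry", "r"))            # 3
    print(states_without_letter(INDIAN_STATES, "a"))  # ['Sikkim']
```

Under that assumption, Sikkim is the only state without an ‘A’, which makes it easy to judge whether a model's answer is right.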

I think we should wait for the larger Gemini 2.0 Pro Thinking model to see stronger performance and a real demonstration of inference scaling. That said, on the LMSYS Chatbot Arena leaderboard, Gemini’s thinking model tops the charts in all categories.